
Automated software testing significantly accelerates the testing process, which directly improves the delivery and quality of software. It's an excellent way to put your testing process on steroids: you program a tool to simulate how a human interacts with your software.

Once programmed, it's like having many robotic helpers that you can create on the fly to execute the test cases. This makes testing massively scalable and, in turn, dramatically cuts execution time.

Rather than relying on humans, automation uses test scripts to simulate end-user behaviour. Test scripts are developed with automation tools such as Selenium and execute the defined test steps. They then compare the actual results against the expected results. However, automated software testing has its own limitations and drawbacks. One of the biggest is False Failures, or False Fails. In this article, we dig deeper into what False Fails are and how they can undermine the value of automation.

What are False Fails?

Every execution of an automation test suite produces a report of passes and fails. A test case passes if the actual result matches the expected result, and fails otherwise.

Failures can be of two types.

- True Fail, which means there is a defect in the system and it is not working as expected.

- False Fail, which means a failure was reported even though there may be no defect and the system may be working as expected. Until it is triaged, a False Fail leaves it unclear whether the test case actually passed or failed.

False failures are one of the major challenges in automation testing. They not only undermine the value of automation and demand a tremendous amount of effort to triage, but also erode trust and confidence in automation. False failures can make up anywhere from 0% to 100% of the fails in an automation execution result.

Many things can cause false failures in automation results. The most common ones are listed below.

- Script interaction with browser: To simulate the end-user scenario, the automation tool and scripts have to interact with the browser. In the case of Selenium, the Selenium WebDriver accepts commands from the script and sends them to the browser. A command can be anything from clicking a link or button to getting the text of a specific element. Sometimes a page loads slowly, for example because of a slow internet connection, so by the time the browser receives a command, the requested page is not fully loaded. The browser then cannot perform the expected action, and Selenium throws a timeout exception. This is a classic example of a false failure in browser-based applications.

- Locator changed: A locator is a unique identifier, such as an id, name, CSS selector, or XPath, that helps scripts find a specific element on the web page. Scripts use locators to tell the browser which element to act on, and the browser can perform an action only if it can find the element through its locator. While fixing bugs or introducing new HTML attributes or styles, developers may change existing identifiers or add new elements whose identifiers the automation scripts do not know about. The scripts then fail to find those elements, and a failure is reported even though the application may be working correctly.

- Introduction of new features or behaviour: Failing to update automation scripts as new features or behaviours are introduced is a sure-shot recipe for false failures. When outdated scripts run, existing test cases may fail even though the features they cover work as expected, often as a direct result of a change in some intermediate step. For example, on an e-commerce site, clicking Search originally showed the results on the same page, but after a modification the results open in a new tab. If the automation script is not updated to reflect this, every test case that continues past the search results will fail, even though the rest of the application may be exactly the same.

- Dynamic behavior of the application: Many applications, especially in the e-commerce world, use real inventory for testing. For example, in airline booking, the upgrade options offered while selecting a flight depend on whether an upgrade is still available. Several people may opt for the same upgrade at the same time, so while a test is running, a real user may claim the upgrade the script expects to find, or the script may still be offered an upgrade that has already gone to someone else. Either way, the dynamic nature of the data can cause a false failure. Some of this complexity can be handled at the script level, but never with 100% correctness.
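One common way to reduce timing-related False Fails is an explicit wait: instead of sending a command the instant the script reaches it, the script polls until the page is ready and reports a failure only after a timeout. Selenium's Python bindings provide this through `WebDriverWait(driver, timeout).until(...)` combined with conditions from `selenium.webdriver.support.expected_conditions`. The sketch below shows the same polling idea in plain Python so it runs without a browser; `wait_until` and `WaitTimeout` are hypothetical names, and the simulated page stands in for a real one.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    """Raised when the condition is still unmet after the timeout (hypothetical name)."""


def wait_until(condition: Callable[[], Optional[T]],
               timeout: float = 10.0,
               poll_interval: float = 0.5) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # the page is ready, so the script can act on it
        if time.monotonic() >= deadline:
            # Only now report a failure: the page never became ready in time.
            raise WaitTimeout(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Usage: a simulated page whose search button appears on the third check.
checks = {"count": 0}


def search_button_present() -> Optional[str]:
    checks["count"] += 1
    return "search-button" if checks["count"] >= 3 else None


print(wait_until(search_button_present, timeout=5, poll_interval=0.1))  # search-button
```

An explicit wait does not remove the timeout exception; it reserves it for pages that genuinely fail to load within the limit, so a slow page alone no longer produces a False Fail.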

The Problem with False Failures – The Never-Ending Regression Problem

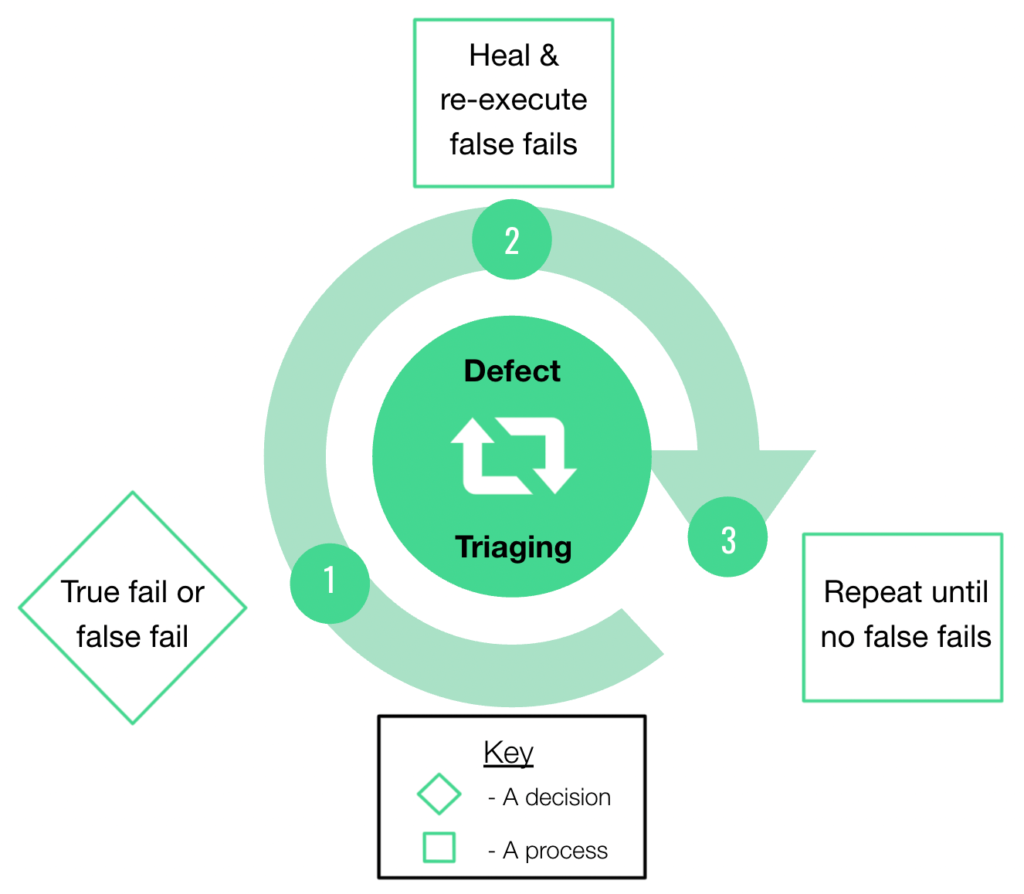

The problem with False Fails is that completing the regression requires a time-consuming, and sometimes never-ending, repetitive three-step process. A regression is supposed to produce a binary result: did each test case truly pass or truly fail? In practice, the output of a typical regression also contains a bunch of “I don’t know” False Fails. The three-step process needed to resolve them is:

- Detect which test cases are True Fails and which ones are False Fails.

- Re-execute all False Fails.

- Repeat until there are ONLY True Passes and True Fails.

It’s the “repeat until there are only True Passes and True Fails” step that causes the endless loop.
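The detect, re-execute, repeat cycle can be sketched as a loop. This is a minimal illustration under stated assumptions, not Webomates' implementation: `execute_and_triage` is a hypothetical callback that runs one test case and returns its triaged verdict, and `max_rounds` is an assumed cap, added because in practice nothing guarantees the loop ends.

```python
from enum import Enum
from typing import Callable, Dict, List


class Verdict(Enum):
    TRUE_PASS = "true pass"
    TRUE_FAIL = "true fail"
    FALSE_FAIL = "false fail"  # "I don't know": the test must be run again


def run_regression(test_ids: List[str],
                   execute_and_triage: Callable[[str], Verdict],
                   max_rounds: int = 5) -> Dict[str, Verdict]:
    """Detect, re-execute, and repeat until only true passes and true fails remain.

    `max_rounds` (hypothetical) caps the loop, since it may never end on its own.
    """
    results: Dict[str, Verdict] = {}
    pending = list(test_ids)
    for _ in range(max_rounds):
        if not pending:
            break  # only True Passes and True Fails are left
        # Step 1: run each pending test and detect its verdict.
        for test_id in pending:
            results[test_id] = execute_and_triage(test_id)
        # Steps 2 and 3: keep only the False Fails and go around again.
        pending = [t for t in pending if results[t] is Verdict.FALSE_FAIL]
    return results


# Usage: "checkout" hits a slow page twice before it runs cleanly.
attempts: Dict[str, int] = {}


def fake_execute_and_triage(test_id: str) -> Verdict:
    attempts[test_id] = attempts.get(test_id, 0) + 1
    if test_id == "checkout" and attempts[test_id] < 3:
        return Verdict.FALSE_FAIL
    return Verdict.TRUE_FAIL if test_id == "login" else Verdict.TRUE_PASS


final = run_regression(["login", "search", "checkout"], fake_execute_and_triage)
print({name: verdict.value for name, verdict in final.items()})
# {'login': 'true fail', 'search': 'true pass', 'checkout': 'true pass'}
```

If `max_rounds` runs out while some verdicts are still `FALSE_FAIL`, the regression has not completed, which is exactly the situation described above.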

Triaging and analyzing false failures can be a very time-consuming and cumbersome process. Triaging a single test case can take anywhere from 30 minutes to 2 hours, depending on multiple factors:

- How well are the test scripts maintained?

- How well does the triaging engineer know the feature change?

- Does the engineer have a handy automation execution log?

- Is there any screenshot or video of the automation execution?

- Does the engineer have the execution history of past runs?

- In which environment was the script created and executed?

- What changed between the previous version of the software and this one?

- What is the possible cause?

At the end of the day, having false failures undermines the value of automation.
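Some of the factors above, such as the execution log and what changed in this version, can be turned into a rough first-pass filter that decides which failures to look at first. The sketch below is a hypothetical heuristic, not the AI Defect Predictor: it assumes each failure record carries the exception type from the execution log and a flag saying whether the feature under test changed in this build. The names `Failure`, `triage_hint`, and `LIKELY_FALSE_FAIL` are illustrative only.

```python
from dataclasses import dataclass

# Hypothetical rules linking failure signals to the false-fail causes listed
# earlier. The keys are Selenium exception class names, compared as plain
# strings so this sketch runs without Selenium installed.
LIKELY_FALSE_FAIL = {
    "TimeoutException": "script interaction with browser (page not ready)",
    "NoSuchElementException": "locator changed",
    "StaleElementReferenceException": "script interaction with browser (element re-rendered)",
}


@dataclass
class Failure:
    test_id: str
    error_type: str                # taken from the automation execution log
    feature_changed: bool = False  # from "what changed in this version?"


def triage_hint(failure: Failure) -> str:
    """Return a first guess that orders manual triage; it is not a verdict."""
    if failure.error_type in LIKELY_FALSE_FAIL:
        return "likely false fail: " + LIKELY_FALSE_FAIL[failure.error_type]
    if failure.feature_changed:
        return "likely false fail: new feature or behaviour, script may be outdated"
    if failure.error_type == "AssertionError":
        return "likely true fail: actual result differs from expected result"
    return "unknown: needs full manual triage"


for f in [Failure("search", "TimeoutException"),
          Failure("cart", "AssertionError"),
          Failure("results", "AssertionError", feature_changed=True)]:
    print(f.test_id, "->", triage_hint(f))
```

A rule table like this only ranks the work; every hint still has to be confirmed with the logs, screenshots, and execution history before a failure is called true or false.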

Retest The False Fails:

Unfortunately, once a False Fail is detected, the regression's problems are only starting. The path to truly validate the test case depends on the type of failure:

- Change in feature functionality of the product due to a new feature or bug fix

  a. Test case has to be updated

  b. Automation script has to be updated

- Automation script failure

  a. Automation script has to be updated

In many cases, after triage, an automation fix might not be possible in a reasonable timeframe. The engineer then has to run the scenario with an alternate execution method, such as manual or crowdsourced testing, to uncover any issues in the new software build. Whether the path is an automation update, a manual test, or a crowdsourced run, the results once again need to be analyzed to ensure there are no further False Fails. If too much time passes during this stage, the software has most likely been updated again in the meantime. The regression is then never fully completed, and the regression system stays in perpetual catch-up mode with the output from development.
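The retest paths described above amount to a small decision procedure. A minimal sketch, assuming each confirmed False Fail has already been tagged with one of the two causes and with a yes/no judgment on whether the script can be fixed in time; `Cause` and `retest_plan` are hypothetical names:

```python
from enum import Enum
from typing import List


class Cause(Enum):
    FEATURE_CHANGE = "feature change from a new feature or bug fix"
    SCRIPT_FAILURE = "automation script failure"


def retest_plan(cause: Cause, script_fix_in_time: bool) -> List[str]:
    """List the follow-up steps for one confirmed False Fail."""
    steps: List[str] = []
    if cause is Cause.FEATURE_CHANGE:
        steps.append("update test case")
    if script_fix_in_time:
        steps.append("update automation script and re-run it")
    else:
        # No automation fix in a reasonable timeframe: validate this build
        # another way (the deferred script update is not modelled here).
        steps.append("run scenario manually or via crowdsource")
    # Whichever path was taken, the new results must be checked again.
    steps.append("re-analyze results for further False Fails")
    return steps


print(retest_plan(Cause.FEATURE_CHANGE, script_fix_in_time=False))
```

The final step is what feeds each retest back into the detect, re-execute, repeat cycle, so every path ends with another round of analysis.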

How Webomates Handled It Using AI

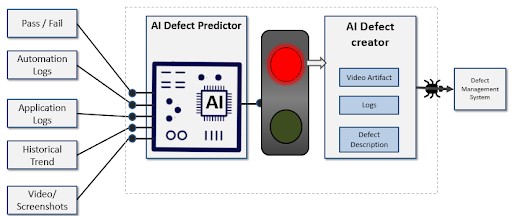

Webomates runs its own automation platform and grid on AWS and executes thousands of test cases daily. To overcome the challenges that False Fails pose in automation, Webomates developed the AI Defect Predictor. The AI Defect Predictor not only predicts whether a failure is a True Fail or a False Fail, but also uses an AI engine to help create defects for True Fails.

AI Defect Predictor and Defect Creator have significantly reduced the Webomates team’s triage and defect creation time from 23 hours to 7 hours for 300 automated test case failures with a 40% failure rate.
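The reported numbers are easier to compare per failure. A small worked calculation using only the figures above (23 hours before, 7 hours after, 300 failures), assuming the hours cover triage and defect creation for all 300 failures together:

```python
def minutes_per_failure(total_hours: float, failures: int) -> float:
    """Average triage and defect-creation time per failure, in minutes."""
    return total_hours * 60 / failures


before = minutes_per_failure(23, 300)  # 1380 min / 300 = 4.6 min
after = minutes_per_failure(7, 300)    # 420 min / 300 = 1.4 min
saved = (23 - 7) / 23                  # share of the original time saved
print(f"{before:.1f} min -> {after:.1f} min per failure, {saved:.0%} less time")
# prints: 4.6 min -> 1.4 min per failure, 70% less time
```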

If you are interested in learning more about our AI Defect Predictor and Webomates CQ, schedule a demo or reach out to us at info@webomates.com.
