
Performance testing is a non-functional testing technique that exercises a system and then measures, validates and verifies its response time, stability, scalability, speed and reliability in a production-like environment.

It may also identify performance bottlenecks and potential crashes when the software is subjected to extreme testing conditions. The system can then be fine-tuned by identifying and addressing the root cause of each problem.

The main objective of Performance testing is to compare behavior against system requirements. If performance requirements were not expressed by stakeholders, then the initial testing effort will establish baseline metrics for the system, or the benchmark to meet in future releases.

Different applications may have different performance benchmarks that must be met before the product is made available to the end user. A wide range of performance tests is carried out to ensure that the software meets those standards.

This article is focused on some commonly used Performance testing techniques and the process involved in testing an application.

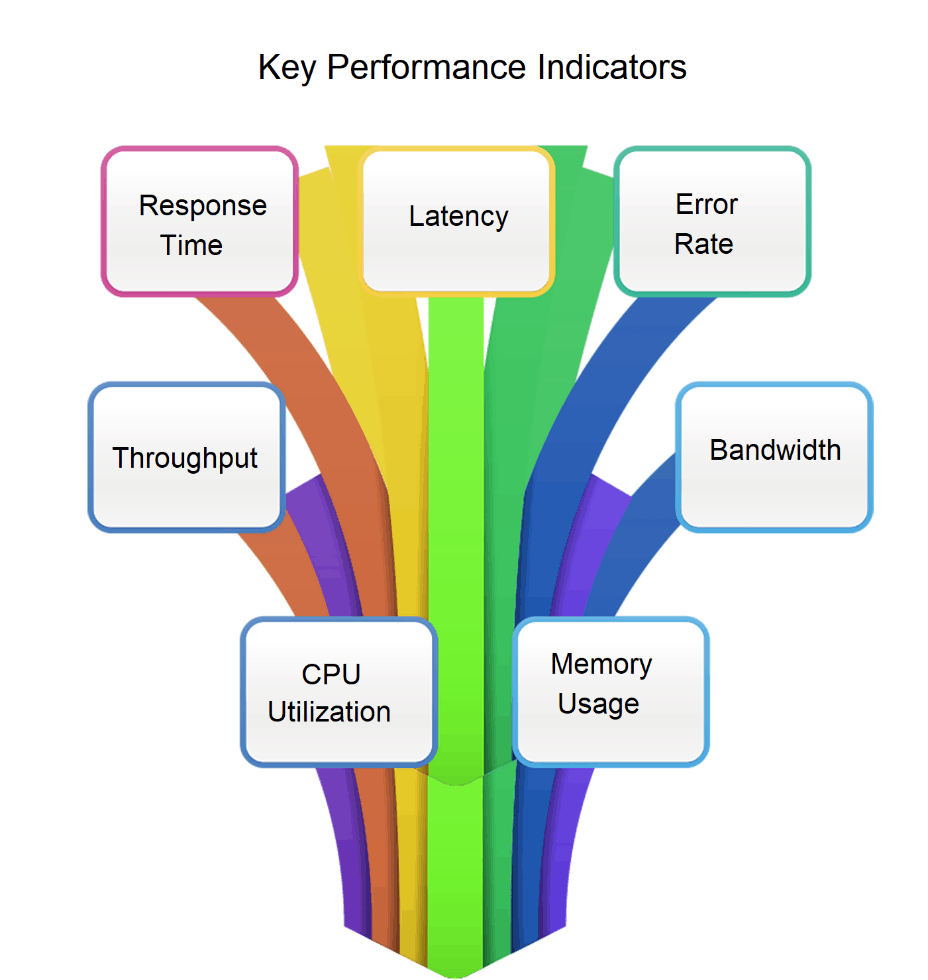

Performance Testing Metrics

Every system has certain Key Performance Indicators (KPIs), or metrics, that are evaluated against the baseline during Performance testing.

Some of the commonly used Performance Metrics are described below.

- Response time: Response time is the time elapsed between a request and the system's completion of that request. Response time is critical in real-time applications. Testers usually monitor average response time, peak response time, time to first byte, time to last byte, etc.

- Latency: Latency is the time a request spends waiting to be processed. It is measured as the time elapsed between sending the request and receiving the first byte of the response.

- Error Rate: Error rate is the percentage of requests that result in errors compared with the total number of requests. The error rate may increase as the application approaches or exceeds its threshold limit.

- Throughput: Throughput is the number of processes or transactions handled by the system in a specified time.

- Bandwidth: Bandwidth is an important metric for checking network performance. It is the volume of data transferred per second.

- CPU Utilization: CPU utilization is a key metric that measures the percentage of time the CPU spends handling a process. High CPU utilization by any task is flagged for investigation as a possible performance issue.

- Memory Usage: If the amount of memory used by a process is unusually high despite proper memory-handling routines, this may indicate memory leaks, which need to be fixed before the system goes live.
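To make the metric definitions above concrete, here is a minimal sketch that computes average and peak response time, average latency (time to first byte), error rate and throughput from per-request records. The `RequestSample` record and its field names are hypothetical; a real load-testing tool would produce equivalent data in its own format.

```python
from dataclasses import dataclass


@dataclass
class RequestSample:
    """One request observed during a test run (hypothetical record format)."""
    start_s: float       # time the request was sent, in seconds
    first_byte_s: float  # time the first byte of the response arrived
    end_s: float         # time the response was complete
    ok: bool             # False if the request resulted in an error


def summarize(samples: list[RequestSample]) -> dict[str, float]:
    """Compute common performance metrics from a non-empty list of samples."""
    response_times = [s.end_s - s.start_s for s in samples]
    latencies = [s.first_byte_s - s.start_s for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    # Observation window: first request sent to last response completed.
    window_s = max(s.end_s for s in samples) - min(s.start_s for s in samples)
    return {
        "avg_response_s": sum(response_times) / len(samples),
        "peak_response_s": max(response_times),
        "avg_latency_s": sum(latencies) / len(samples),
        "error_rate_pct": 100.0 * errors / len(samples),
        "throughput_per_s": len(samples) / window_s,
    }


# Four hypothetical requests, one of which failed.
samples = [
    RequestSample(0.0, 0.05, 0.20, True),
    RequestSample(0.5, 0.60, 0.90, True),
    RequestSample(1.0, 1.10, 1.60, False),
    RequestSample(1.5, 1.55, 2.00, True),
]
print(summarize(samples))
```

Here throughput counts every request handled in the window, including failed ones; a team could just as reasonably count only successful transactions.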

Based on the type of application being tested, the technical and business stakeholders select which metrics need to be checked and accordingly attach priorities to them. Unlike functional requirements, performance requirements are not binary. The metrics are usually expressed with a target percentile, and sometimes combined with other metrics. For example, latency might be expressed with throughput: response time must be less than 500 milliseconds for 90% of responses at a throughput of 10 requests per second.
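The example requirement above (under 500 milliseconds for 90% of responses at 10 requests per second) can be checked mechanically. The sketch below uses the nearest-rank percentile method; the function names and sample data are illustrative assumptions, and real tools may compute percentiles with interpolation instead.

```python
import math


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Return the pct-th percentile (0 < pct <= 100) using the nearest-rank method."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def meets_requirement(response_ms: list[float], achieved_rps: float,
                      limit_ms: float = 500.0, pct: float = 90.0,
                      required_rps: float = 10.0) -> bool:
    """True if the pct-th percentile response time is below limit_ms while
    the test sustained at least required_rps requests per second."""
    if achieved_rps < required_rps:
        return False  # a latency result at a lower load does not count
    return percentile_nearest_rank(response_ms, pct) < limit_ms


# Ten hypothetical response times (ms) measured at 10.2 requests per second.
times = [120, 180, 210, 250, 260, 300, 340, 410, 480, 900]
print(percentile_nearest_rank(times, 90))           # 480
print(meets_requirement(times, achieved_rps=10.2))  # True
```

Note that the single 900 ms outlier does not fail the 90th-percentile target; that is exactly why percentile targets are usually paired with a peak or 99th-percentile check.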

Note that since CPU and Memory usage are typically not visible to system users, they may not be selected for targets, but are typically collected as indicators of problems and limits.

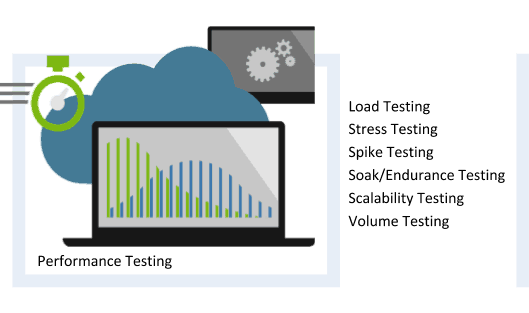

Types of Performance Testing

Performance testing is usually conducted to test a particular performance metric specified by the stakeholders (customers, designers, and developers) in the requirements document.

Every metric has its own importance from a technical or business point of view.

The following diagram represents a broad classification of the various types of Performance Testing.

- Load Testing

Load testing is a type of Performance testing that determines an application's performance under an increasing workload until it reaches its threshold limits. It checks the reliability of the system in terms of consistent performance when it is subjected to high load. Load testing helps in identifying bottlenecks in the system and its maximum sustainable capacity.
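The ramp-up idea behind load testing can be sketched as a loop that raises the load step by step and stops at the threshold. To keep the example self-contained, the system under test is replaced by a toy M/M/1 queueing model, whose mean response time is 1 / (service rate - arrival rate); that model, the function names and the limits are assumptions for illustration only. A real load test would drive actual traffic with a load-generation tool.

```python
def mm1_response_time_s(arrival_rps: float, service_rps: float) -> float:
    """Mean response time of an M/M/1 queue: 1 / (mu - lambda).
    Used here only as a stand-in for a real system under test."""
    if arrival_rps >= service_rps:
        return float("inf")  # queue grows without bound: system saturated
    return 1.0 / (service_rps - arrival_rps)


def ramp_load(service_rps: float, step_rps: float, max_rps: float,
              limit_s: float) -> tuple[float, list[tuple[float, float]]]:
    """Increase load step by step; return the highest load whose response
    time stays within limit_s, plus the (load, response time) history."""
    history = []
    max_sustainable = 0.0
    load = step_rps
    while load <= max_rps:
        rt = mm1_response_time_s(load, service_rps)
        history.append((load, rt))
        if rt > limit_s:
            break  # threshold reached: stop ramping
        max_sustainable = load
        load += step_rps
    return max_sustainable, history


capacity, history = ramp_load(service_rps=100, step_rps=10, max_rps=200, limit_s=0.06)
print(capacity)  # 80
```

The history shows the typical load-test curve: response time stays nearly flat at low load and then rises sharply as the system approaches saturation.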

- Stress Testing

Stress Testing, also known as Fatigue Testing, is a type of performance testing that checks the software's performance and stability when it is pushed beyond its tipping point, i.e. its defined maximum load limits. Stress testing also checks for effective error management and the system's recovery procedure in case of failure due to excessive load.
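A stress test drives load past the defined maximum and then checks two things: that the excess is handled as errors rather than a crash, and that the system recovers once load returns to normal. This minimal sketch assumes a hypothetical `SheddingService` that rejects requests beyond a fixed per-tick capacity; it illustrates the checks, not any particular server's behaviour.

```python
from dataclasses import dataclass


@dataclass
class SheddingService:
    """Toy service (hypothetical) that handles up to `capacity` requests per
    tick and rejects the excess with an error instead of crashing."""
    capacity: int
    handled: int = 0
    rejected: int = 0

    def tick(self, incoming: int) -> int:
        """Process one tick of load; return the number of rejected requests."""
        accepted = min(incoming, self.capacity)
        excess = incoming - accepted
        self.handled += accepted
        self.rejected += excess
        return excess


def stress_profile(service: SheddingService, loads: list[int]) -> list[float]:
    """Apply each tick's load and return the per-tick error rate in percent."""
    rates = []
    for incoming in loads:
        rejected = service.tick(incoming)
        rates.append(100.0 * rejected / incoming if incoming else 0.0)
    return rates


svc = SheddingService(capacity=100)
# Normal load, then 2x and 3x the defined maximum, then back to normal.
rates = stress_profile(svc, [80, 200, 300, 80])
recovered = rates[-1] == 0.0  # errors should stop once the overload ends
print(rates, recovered)
```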

- Spike Testing

Spike Testing verifies the system's performance when there is an extreme variation in load, such as a sudden increase or decrease, also known as a spike. Spike testing takes into account the user count and the complexity of the task being performed. It also keeps track of the recovery time between two spikes.
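A spike test needs a load profile with an abrupt jump and a way to measure recovery time. In this sketch, recovery is assumed to mean "the first second after the spike in which response time is back within 10% of the baseline"; both that definition and the sample measurements are hypothetical and should be replaced by whatever the stakeholders agree on.

```python
from typing import Optional


def spike_load_profile(baseline_users: int, spike_users: int,
                       before_s: int, spike_s: int, after_s: int) -> list[int]:
    """Concurrent-user count per second: steady baseline, a sudden spike,
    then an abrupt drop back to baseline."""
    return ([baseline_users] * before_s + [spike_users] * spike_s
            + [baseline_users] * after_s)


def recovery_time_s(response_ms: list[float], spike_end: int,
                    baseline_ms: float, tolerance: float = 0.1) -> Optional[int]:
    """Seconds after the spike ends until response time is first back within
    `tolerance` (10% by default) of baseline; None if it never recovers."""
    limit = baseline_ms * (1 + tolerance)
    for t in range(spike_end, len(response_ms)):
        if response_ms[t] <= limit:
            return t - spike_end
    return None


profile = spike_load_profile(50, 500, before_s=3, spike_s=2, after_s=5)
# Hypothetical per-second response times (ms) observed under that profile.
measured = [200, 210, 205, 1500, 1800, 900, 450, 230, 215, 205]
print(recovery_time_s(measured, spike_end=3 + 2, baseline_ms=205))  # 3
```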

- Soak/Endurance Testing

Endurance testing, also known as Soak testing, tests the system's ability to perform under continuous load over an extended period of time. It is performed to determine the system's robustness. Endurance testing monitors metrics like memory utilization to detect leaks, overflow problems or any other performance degradation.
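One simple way to turn soak-test memory samples into a leak signal is to fit a least-squares line through memory usage over time and flag a slope that exceeds an acceptable growth rate. The 5 MB/hour threshold and the sample data below are assumptions; steady growth can also come from legitimate causes such as caches warming up, so a flag is a prompt for investigation rather than proof of a leak.

```python
def memory_growth_mb_per_hour(samples: list[tuple[float, float]]) -> float:
    """Least-squares slope of memory usage over time.
    samples: (elapsed_hours, memory_mb) pairs with at least two distinct times."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    cov = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    return cov / var


def looks_like_leak(samples: list[tuple[float, float]],
                    max_growth_mb_per_hour: float = 5.0) -> bool:
    """Flag a possible leak when memory climbs faster than an (assumed)
    acceptable rate over the soak period."""
    return memory_growth_mb_per_hour(samples) > max_growth_mb_per_hour


# Hypothetical readings every 2 hours during an 8-hour soak test.
steady = [(0, 512), (2, 515), (4, 511), (6, 514), (8, 513)]
leaky = [(0, 512), (2, 560), (4, 610), (6, 655), (8, 705)]
print(looks_like_leak(steady), looks_like_leak(leaky))  # False True
```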

- Scalability Testing

Scalability Testing is a type of Performance testing that checks how well the system adapts to changing needs. It tests the ability of the software to scale up/out or down/in when it is subjected to changes in performance attributes (or metrics).
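A common way to summarize a scale-out test is scaling efficiency: measured throughput divided by the throughput that perfectly linear scaling would predict. The sketch below computes it; the cluster sizes and throughput figures are hypothetical.

```python
def scaling_efficiency(baseline_nodes: int, baseline_tps: float,
                       nodes: int, tps: float) -> float:
    """Measured throughput divided by ideal linear throughput.
    1.0 means perfectly linear scale-out; lower values mean diminishing returns."""
    ideal_tps = baseline_tps * nodes / baseline_nodes
    return tps / ideal_tps


# Hypothetical measurements: throughput (transactions/s) per cluster size.
measured = {1: 400.0, 2: 780.0, 4: 1450.0, 8: 2400.0}
for n, tps in measured.items():
    eff = scaling_efficiency(1, measured[1], n, tps)
    print(f"{n} nodes: {tps:.0f} TPS, efficiency {eff:.0%}")
```

A falling efficiency as nodes are added points to a shared bottleneck (for example a database or lock) that does not scale with the rest of the system.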

- Volume Testing

Volume testing, also known as Flood Testing, checks the efficiency of software when it has to process a huge amount of data. It checks the system's behaviour and identifies bottlenecks when the system is subjected to voluminous data processing. It is mainly used for database-related testing, where it checks database response time, correct data storage, data loss, data integrity, memory usage, etc.
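The database checks listed above (response time, data loss, data integrity) can be sketched with Python's built-in `sqlite3` module and an in-memory database standing in for the real one. The `orders` table, its columns and the row counts are hypothetical; a real volume test would run against the production database engine with production-like data sizes.

```python
import hashlib
import sqlite3
import time


def volume_test(row_count: int) -> dict:
    """Insert row_count rows into an in-memory SQLite table, verify nothing
    was lost or altered, and time a representative aggregate query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    rows = [(i, f"customer-{i % 1000}", i * 0.01) for i in range(row_count)]

    start = time.perf_counter()
    with conn:  # commits the transaction on success
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    insert_s = time.perf_counter() - start

    # Data loss check: every inserted row must be present.
    stored = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

    # Integrity check: hash stored rows and compare with the source rows.
    def digest(records) -> str:
        h = hashlib.sha256()
        for rec in records:
            h.update(repr(rec).encode())
        return h.hexdigest()

    stored_rows = conn.execute("SELECT id, customer, amount FROM orders ORDER BY id")
    intact = digest(stored_rows) == digest(rows)

    start = time.perf_counter()
    conn.execute("SELECT customer, SUM(amount) FROM orders GROUP BY customer").fetchall()
    query_s = time.perf_counter() - start
    conn.close()
    return {"rows_stored": stored, "data_intact": intact,
            "insert_s": insert_s, "query_s": query_s}


print(volume_test(200_000))
```

Running the same function at several row counts and comparing `insert_s` and `query_s` shows whether timings grow roughly in proportion to data volume or degrade faster.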


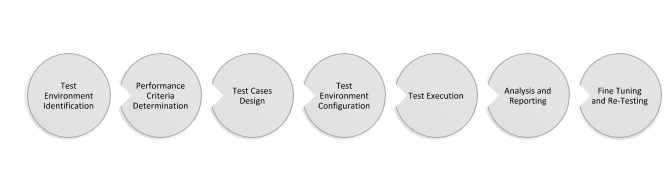

Performance Testing Process

The Performance testing process involves the basic steps depicted in the following diagram.

- Identification of Test Environment

The first step in Performance testing is identifying the test environment, which includes hardware, software, network configuration, database setup, etc. Ideally, testing is done in the target production environment. If that is not possible, intermediate testing can be done in a simulated environment identical to the target environment, though this may be costly. If cost is a constraint, a subset environment with similar or proportionally lower specifications can be set up for testing purposes.

- Determination of Performance Criteria

Every application has its own performance criteria, which have to be identified during the requirement specification stage of the SDLC. The testing metrics are based on these criteria and may include response time, resource utilization, throughput, etc.
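Writing the agreed criteria down as data makes them easy to check automatically after every run. This is a minimal sketch; the `PerformanceCriteria` class, its fields and the example values (which mirror the 500 ms at 10 requests per second example given earlier in this article) are assumptions, not a standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceCriteria:
    """Targets agreed during requirement specification (example values only)."""
    p90_response_ms: float     # 90% of responses must be faster than this...
    min_throughput_rps: float  # ...while sustaining at least this load
    max_error_rate_pct: float  # highest acceptable share of failed requests


def violations(c: PerformanceCriteria, measured: dict[str, float]) -> list[str]:
    """Return the names of the criteria that a measured test run fails to meet."""
    failed = []
    if measured["p90_response_ms"] >= c.p90_response_ms:
        failed.append("response time")
    if measured["throughput_rps"] < c.min_throughput_rps:
        failed.append("throughput")
    if measured["error_rate_pct"] > c.max_error_rate_pct:
        failed.append("error rate")
    return failed


criteria = PerformanceCriteria(p90_response_ms=500, min_throughput_rps=10, max_error_rate_pct=1.0)
run = {"p90_response_ms": 430.0, "throughput_rps": 10.5, "error_rate_pct": 1.5}
print(violations(criteria, run))  # ['error rate']
```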

- Test Cases Designing

Test cases have to be designed keeping in mind that the software will be launched in the real world and used by different users with different styles of usage. The cases should include excessive loads, spikes and large data volumes. It is vital to plan for all possible scenarios to make testing comprehensive.
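Test case design can be captured as a set of named scenarios plus a weighted mix of user behaviours. In this sketch the scenario names, user actions, weights and sizes are all hypothetical; the point is that normal load, excessive load, a sudden spike and a large data volume are each represented, and that each virtual user follows a realistic usage style.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    peak_users: int    # concurrent virtual users at the highest point
    ramp_up_s: int     # 0 means the load arrives all at once (a spike)
    duration_s: int
    dataset_rows: int  # size of the test data the scenario works on


# Hypothetical mix of user behaviours, weighted by how often each occurs.
USER_MIX = {"browse_catalog": 0.6, "search": 0.25, "checkout": 0.1, "bulk_export": 0.05}

SCENARIOS = [
    Scenario("normal_day", peak_users=200, ramp_up_s=300, duration_s=3600, dataset_rows=100_000),
    Scenario("excessive_load", peak_users=2000, ramp_up_s=600, duration_s=1800, dataset_rows=100_000),
    Scenario("sudden_spike", peak_users=1500, ramp_up_s=0, duration_s=300, dataset_rows=100_000),
    Scenario("large_volume", peak_users=200, ramp_up_s=300, duration_s=3600, dataset_rows=50_000_000),
]


def assign_actions(users: int, seed: int = 42) -> list[str]:
    """Give each virtual user an action drawn from USER_MIX so that the
    simulated traffic mirrors different styles of real-world usage."""
    rng = random.Random(seed)  # fixed seed keeps test runs reproducible
    return rng.choices(list(USER_MIX), weights=list(USER_MIX.values()), k=users)


for s in SCENARIOS:
    print(s.name, assign_actions(5))
```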

- Configuration of Test Environment

The test environment has to be configured with the relevant software, hardware, third-party software, network setup, database setup, testing tools, debugging tools, etc. It is important to set up the environment according to the guidelines, because any issues here may adversely affect the test results and render the whole process unproductive.

- Test Execution

Execute the test cases as per the test plan, and monitor and record the results.
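At its core, executing and recording a test means timing each call with a monotonic clock and writing every result, including failures, somewhere durable. This minimal sketch records to CSV text using only the standard library; `fast_lookup` and `broken_endpoint` are hypothetical stand-ins for calls to the system under test.

```python
import csv
import io
import time
from typing import Callable


def execute(test_cases: dict[str, Callable[[], None]], iterations: int) -> str:
    """Run each test case `iterations` times, timing every call with
    time.perf_counter, and return the recorded results as CSV text."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["test_case", "iteration", "elapsed_ms", "outcome"])
    for name, case in test_cases.items():
        for i in range(iterations):
            start = time.perf_counter()
            try:
                case()
                outcome = "pass"
            except Exception as exc:  # record the failure and keep going
                outcome = f"error: {type(exc).__name__}"
            elapsed_ms = (time.perf_counter() - start) * 1000
            writer.writerow([name, i, f"{elapsed_ms:.3f}", outcome])
    return out.getvalue()


# Hypothetical stand-ins for calls to the system under test.
def fast_lookup() -> None:
    time.sleep(0.01)


def broken_endpoint() -> None:
    raise TimeoutError("no response")


print(execute({"lookup": fast_lookup, "broken": broken_endpoint}, iterations=3))
```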

- Analysis and Reporting

Test reports include conformance to requirements (or baselines) as well as secondary metrics on system resource utilization, e.g. CPU, memory and disk.
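A report comparing a run against a baseline can be as simple as a table of metrics with their percentage change and a pass/regressed status. In this sketch a metric counts as regressed when it moves in the bad direction by more than an assumed 5% tolerance; the metric names and values are hypothetical.

```python
def conformance_report(baseline: dict[str, float], current: dict[str, float],
                       lower_is_better: set[str], tolerance_pct: float = 5.0) -> str:
    """Render a plain-text table comparing a test run against a baseline run.
    A metric is marked REGRESSED when it moves in the bad direction by more
    than tolerance_pct percent."""
    lines = [f"{'metric':<18}{'baseline':>10}{'current':>10}{'change':>9}  status"]
    for name, base in baseline.items():
        cur = current[name]
        change_pct = 100.0 * (cur - base) / base
        if name in lower_is_better:
            worse = change_pct > tolerance_pct   # e.g. response time, CPU
        else:
            worse = change_pct < -tolerance_pct  # e.g. throughput
        status = "REGRESSED" if worse else "ok"
        lines.append(f"{name:<18}{base:>10.1f}{cur:>10.1f}{change_pct:>+8.1f}%  {status}")
    return "\n".join(lines)


# Hypothetical results: requirement metrics first, then secondary resource metrics.
baseline = {"p90_response_ms": 420.0, "throughput_rps": 12.0, "cpu_pct": 55.0, "memory_mb": 900.0}
current = {"p90_response_ms": 480.0, "throughput_rps": 12.3, "cpu_pct": 71.0, "memory_mb": 910.0}
print(conformance_report(baseline, current,
                         lower_is_better={"p90_response_ms", "cpu_pct", "memory_mb"}))
```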

- Fine Tuning and Retest

Based on the test reports, the development team can fine-tune the software to perform better. Once the changes are made and redeployed, the tests are executed again, followed by analysis and reporting of the results. This cycle continues until the results are within the permissible limits set by the stakeholder requirements.
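The tune-and-retest cycle is essentially a bounded loop. In this sketch, `run_test` and `apply_fix` are hypothetical callables standing in for executing the test suite and for the development team's tuning and redeployment; the cap on rounds is an assumption so the cycle cannot run forever.

```python
from typing import Callable


def tune_until_within_limits(run_test: Callable[[], float],
                             apply_fix: Callable[[int], None],
                             limit: float, max_rounds: int = 5) -> list[float]:
    """Repeat test, report, fix and redeploy until the measured value
    (e.g. p90 response time in ms) is within `limit`, or give up after
    max_rounds. Returns the measurement from every round."""
    history = []
    for round_no in range(1, max_rounds + 1):
        measured = run_test()     # execute the test cases
        history.append(measured)  # record the result for the report
        if measured <= limit:     # within permissible limits: stop
            break
        apply_fix(round_no)       # development team tunes and redeploys
    return history


# Hypothetical system whose p90 response time drops with each fix.
state = {"p90_ms": 800.0}


def fake_run() -> float:
    return state["p90_ms"]


def fake_fix(round_no: int) -> None:
    state["p90_ms"] *= 0.7  # pretend each fix removes 30% of the latency


print(tune_until_within_limits(fake_run, fake_fix, limit=500.0))
```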

Conclusion

Performance testing helps testers and developers in identifying areas of improvement to deliver a robust and efficient product to the end users.

Performance test reports and analysis help stakeholders understand how the product functions in the test scenarios. They can then make strategic business decisions on improvements before the product is launched in the market.

If you like this blog series, please like/follow us @Webomates or @Aseem. And of course, if you are interested in learning more about our service Webomates CQ, here’s a link to request a demo.
